Principal Investigator: Skubic

Co-Investigators: Carlson

Agency: NSF

Project Summary

Motivation

When people communicate with each other about spatially oriented tasks, they use qualitative spatial references more often than precise quantitative terms, for example, “Your eyeglasses are behind the lamp on the table to the left of the bed in the bedroom.” Although natural for people, such qualitative references are problematic for robots that “think” in terms of mathematical expressions and numbers. Yet giving robots the ability to understand and communicate with these spatial references has great potential for creating a more natural interface for robot users. This would allow users to interact with a robot much as they would with another human, and is especially critical if robots are to provide assistive capabilities in unstructured environments occupied by people.

Proposed Research

The proposed project will bring together an interdisciplinary team from Notre Dame and the University of Missouri, leveraging previous NSF-funded work. Within the discipline of Psychology, Carlson has studied the human understanding of spatial terms. Within Computer Science and Engineering, Skubic has investigated spatial referencing algorithms and spatial language for human-robot interfaces. We propose to build on this independent prior work in a joint effort toward a deeper understanding of human spatial language and the creation of human-like 3D spatial language interfaces for robots. Human subject experiments will be conducted with college students and elderly participants to explore spatial descriptions in a fetch task; the results will drive the development of robot algorithms, which will be evaluated using a similar set of assessment experiments in virtual and physical environments.

Project Objectives:

- To empirically capture and characterize the key components of spatial descriptions that indicate the location of a target object in a 3D immersive task embedded in an eldercare scenario, focusing particularly on the integration of spatial language with reference frames, reference object features, complexity, and speaker/addressee assumptions.

- To develop and refine algorithms that enable the robot to produce and comprehend descriptions containing these empirically determined key components within this scenario.

- To assess and validate the robot spatial language algorithms in virtual and physical environments.

Project Highlights:

- An experiment was conducted to capture natural spatial language directives from both younger adults and older adults, creating a corpus of 1024 commands. From these, 149 commands were extracted for a template corpus capturing the different language structures observed. We found significant differences in the spatial language used by older adults vs. younger adults.

- Furniture items were used as reference objects in the fetch task. To support this, we developed a furniture recognition system based on RGB-Depth images. The system also recognizes the orientation of furniture to support descriptions with intrinsic references, e.g., the front of the chair.

- A natural language processing system parses the spatial language directives and extracts a tree structure of language chunks. The tree structure is then grounded to a robot navigation instruction in the form of a sequence of actions based on spatial references to furniture and room structure; the best navigation instruction is selected by scoring.

- The Reference-Direction-Target (RDT) model is proposed to represent indoor robot actions. To control the robot for the fetch task, a behavior model has been designed based on the RDT model.

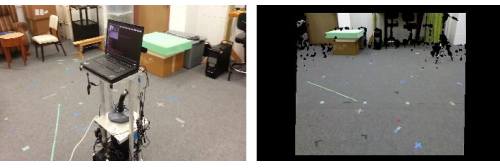

- An assistive robot has been designed and programmed based on this system. The proposed spatial language grounding model and robot behavior model have been tested experimentally in three sets of experiments in robot fetching tasks with a success rate of around 80%. The results show that the system enables a robot to follow spatial language commands in a physical indoor environment even if the referenced furniture items are re-positioned.
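
As a rough illustration of the grounding and scoring steps described in the highlights above, the following Python sketch represents each robot action as a Reference-Direction-Target triple and selects the highest-scoring candidate navigation instruction. The class names, field names, and the toy scoring rule (the fraction of referenced furniture items that are currently detected) are assumptions made for illustration only; the project's actual representation and scoring function are not specified in this summary.

```python
from dataclasses import dataclass
from typing import Iterable, Sequence, Set, Tuple


@dataclass(frozen=True)
class RDTAction:
    """One robot action as a (reference, direction, target) triple.

    Field names are hypothetical, chosen to mirror the RDT acronym.
    """
    reference: str  # e.g. a furniture item or a piece of room structure
    direction: str  # e.g. "left_of", "behind", "on"
    target: str     # the object or location the action should reach


@dataclass(frozen=True)
class Candidate:
    """One candidate grounding of a command: an action sequence and its score."""
    actions: Tuple[RDTAction, ...]
    score: float


def score_candidate(actions: Sequence[RDTAction], detected: Set[str]) -> float:
    """Toy score (an assumption): fraction of actions whose reference is detected."""
    if not actions:
        return 0.0
    hits = sum(1 for action in actions if action.reference in detected)
    return hits / len(actions)


def select_best(
    candidates: Iterable[Sequence[RDTAction]], detected: Set[str]
) -> Candidate:
    """Score every candidate action sequence and return the highest-scoring one."""
    scored = [
        Candidate(tuple(actions), score_candidate(actions, detected))
        for actions in candidates
    ]
    return max(scored, key=lambda c: c.score)


if __name__ == "__main__":
    # Furniture labels a recognition system might report (made-up values).
    detected_furniture = {"bed", "table"}

    # Two hypothetical groundings of "the eyeglasses are on the table
    # to the left of the bed"; the second refers to an undetected couch.
    grounding_a = [
        RDTAction("bed", "left_of", "table"),
        RDTAction("table", "on", "eyeglasses"),
    ]
    grounding_b = [
        RDTAction("couch", "left_of", "table"),
        RDTAction("table", "on", "eyeglasses"),
    ]

    best = select_best([grounding_b, grounding_a], detected_furniture)
    for step in best.actions:
        print(step.reference, step.direction, step.target)
```

Because each action names its reference object rather than a fixed coordinate, a sequence of this kind can in principle be re-grounded after furniture is moved, which is consistent with the re-positioning result reported above.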